PREVis: Perceived Readability Evaluation for Visualizations

Abstract

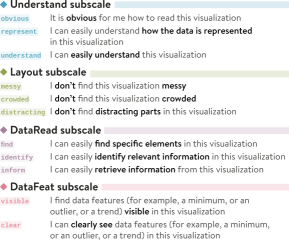

We developed and validated an instrument to measure perceived readability in data visualization: PREVis. Researchers and practitioners can easily use this instrument as part of their evaluations to compare the perceived readability of different visual data representations. Our instrument can complement results from controlled experiments on user task performance or provide additional data during in-depth qualitative work such as design iterations when developing a new technique. Although readability is recognized as an essential quality of data visualizations, so far there has not been a unified definition of the construct in the context of visual representations. As a result, researchers often lack guidance for determining how to ask people to rate their perceived readability of a visualization. To address this issue, we engaged in a rigorous process to develop the first validated instrument targeted at the subjective readability of visual data representations. Our final instrument consists of 11 items across 4 dimensions: understandability, layout clarity, readability of data values, and readability of data patterns. We provide the questionnaire as a document with implementation guidelines at osf.io/9cg8j. Beyond this instrument, we contribute a discussion of how researchers have previously assessed visualization readability, and an analysis of the factors underlying perceived readability in visual data representations.

Main file

Cabouat_2025_PPR.pdf (6.78 MB)

Cabouat_2025_PPR1.jpg (942.33 KB)

| Origin | Files produced by the author(s) |
|---|---|
| Format | Figure, Image |
| Licence | |